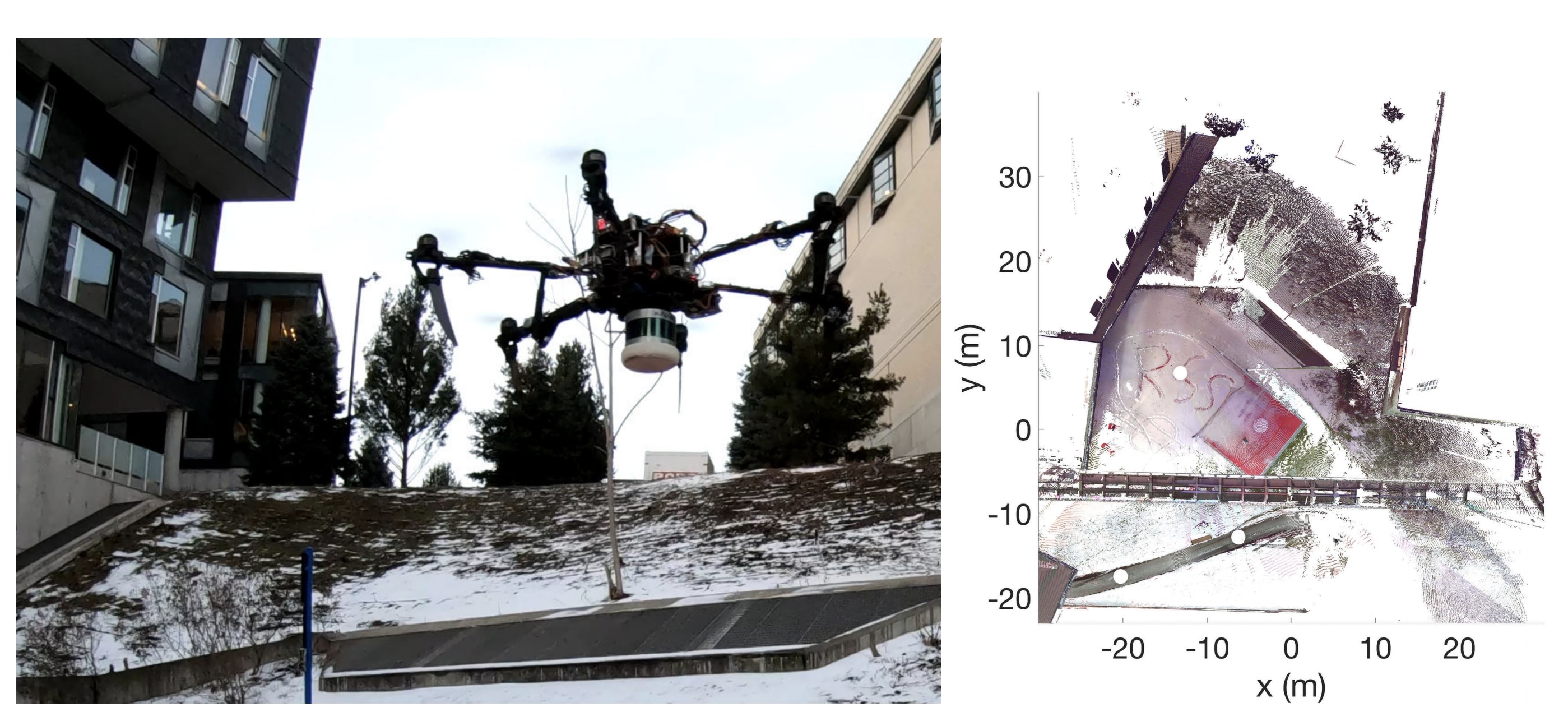

Unified Spherical Frontend: Learning Rotation-Equivariant Representations

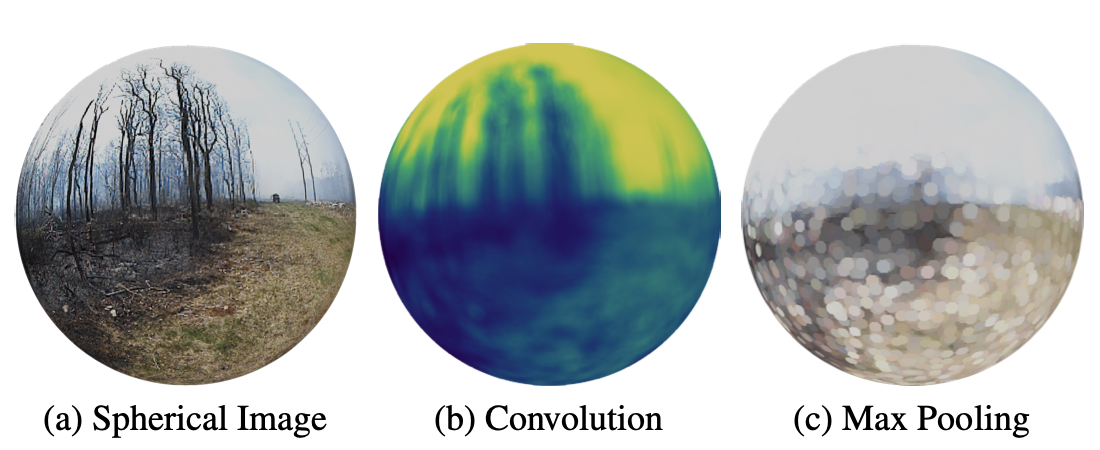

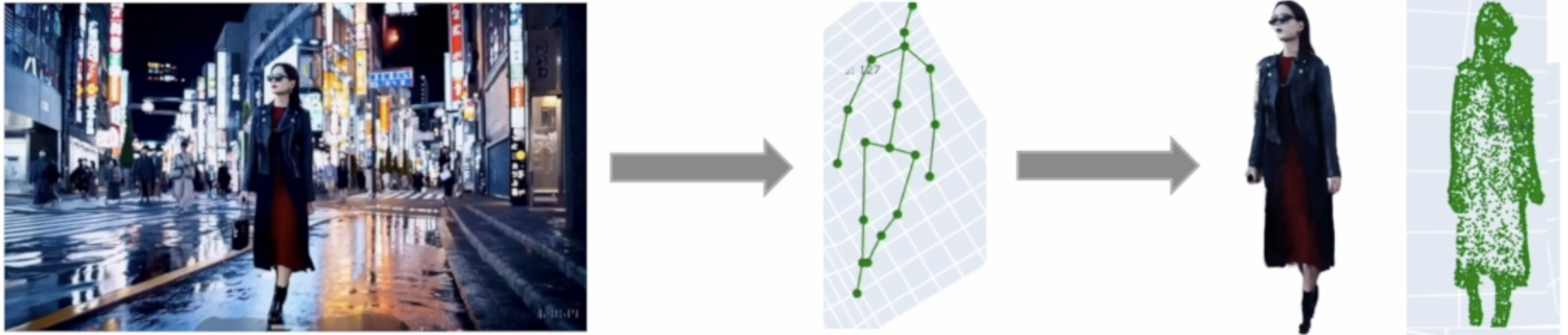

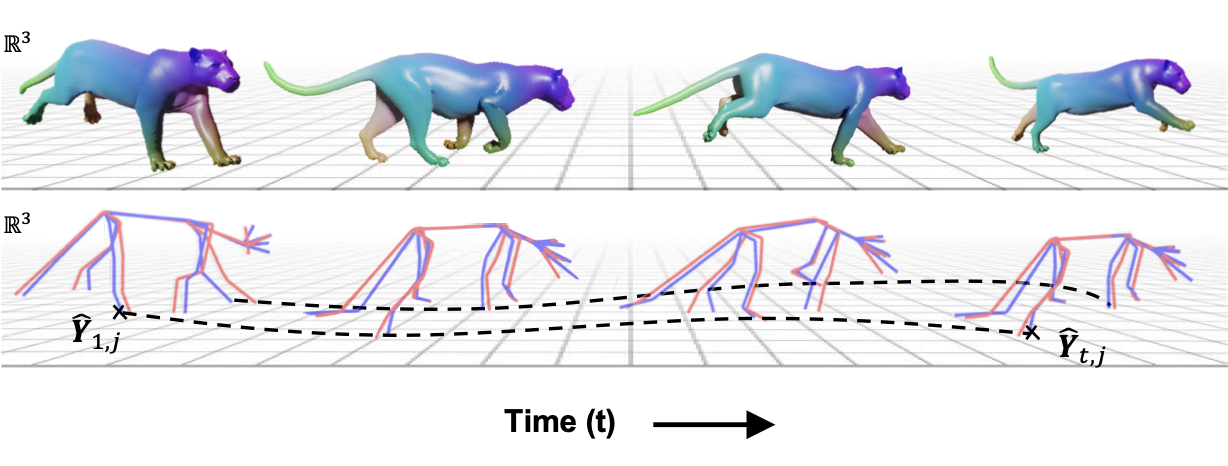

A sensor-agnostic vision frontend that maps any camera geometry (fisheye, pinhole, or 360°) into a unified spherical representation. This invariance to camera geometry lets perception systems deploy across diverse hardware platforms without retraining.
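To make the idea of a shared spherical representation concrete, the sketch below back-projects pixels from three camera types onto the unit sphere. It is an illustration only, not the project's implementation: the function names, the ideal distortion-free pinhole model, the equidistant fisheye model (r = f·θ), the equirectangular layout for 360° images, and the axis convention (x right, y down, z forward) are all assumptions chosen for the example.

```python
import numpy as np

# Assumed convention: x right, y down, z forward (optical axis).

def pinhole_to_sphere(u, v, fx, fy, cx, cy):
    """Back-project pinhole pixels to unit rays (ideal, distortion-free model)."""
    x = (np.asarray(u, dtype=float) - cx) / fx
    y = (np.asarray(v, dtype=float) - cy) / fy
    rays = np.stack([x, y, np.ones_like(x)], axis=-1)
    return rays / np.linalg.norm(rays, axis=-1, keepdims=True)

def fisheye_to_sphere(u, v, f, cx, cy):
    """Back-project pixels of an equidistant fisheye (r = f * theta) to unit rays."""
    dx = np.asarray(u, dtype=float) - cx
    dy = np.asarray(v, dtype=float) - cy
    theta = np.hypot(dx, dy) / f   # angle from the optical axis
    phi = np.arctan2(dy, dx)       # azimuth around the optical axis
    sin_t = np.sin(theta)
    return np.stack([sin_t * np.cos(phi), sin_t * np.sin(phi), np.cos(theta)], axis=-1)

def equirect_to_sphere(u, v, width, height):
    """Map equirectangular (360°) pixels to unit rays."""
    lon = (np.asarray(u, dtype=float) / width - 0.5) * 2.0 * np.pi
    lat = (0.5 - np.asarray(v, dtype=float) / height) * np.pi
    cos_lat = np.cos(lat)
    # y points down, so positive latitude (up) gives negative y.
    return np.stack([cos_lat * np.sin(lon), -np.sin(lat), cos_lat * np.cos(lon)], axis=-1)

# The image center of each camera maps to the same forward direction.
print(pinhole_to_sphere(320, 240, fx=500, fy=500, cx=320, cy=240))
print(fisheye_to_sphere(320, 240, f=300, cx=320, cy=240))
print(equirect_to_sphere(512, 256, width=1024, height=512))
```

Once every pixel is expressed as a direction on the sphere, a downstream model can in principle consume the same input format regardless of which sensor produced the image. Real lenses would additionally need calibrated distortion parameters, which this sketch omits.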